About me

I am a Ph.D. candidate in Computer Science in the CVML Group at the National University of Singapore (NUS), advised by Prof. Angela Yao. My research focuses on 3D generation and editing.

I hold a Master of Computing degree from NUS, where I deepened my interest in the intersection of AI and computer vision. Before that, I studied Information Management and Information Systems at the Beijing Institute of Technology, where I built a solid foundation in technology and problem-solving.

I am actively seeking industry internship opportunities.

Publications

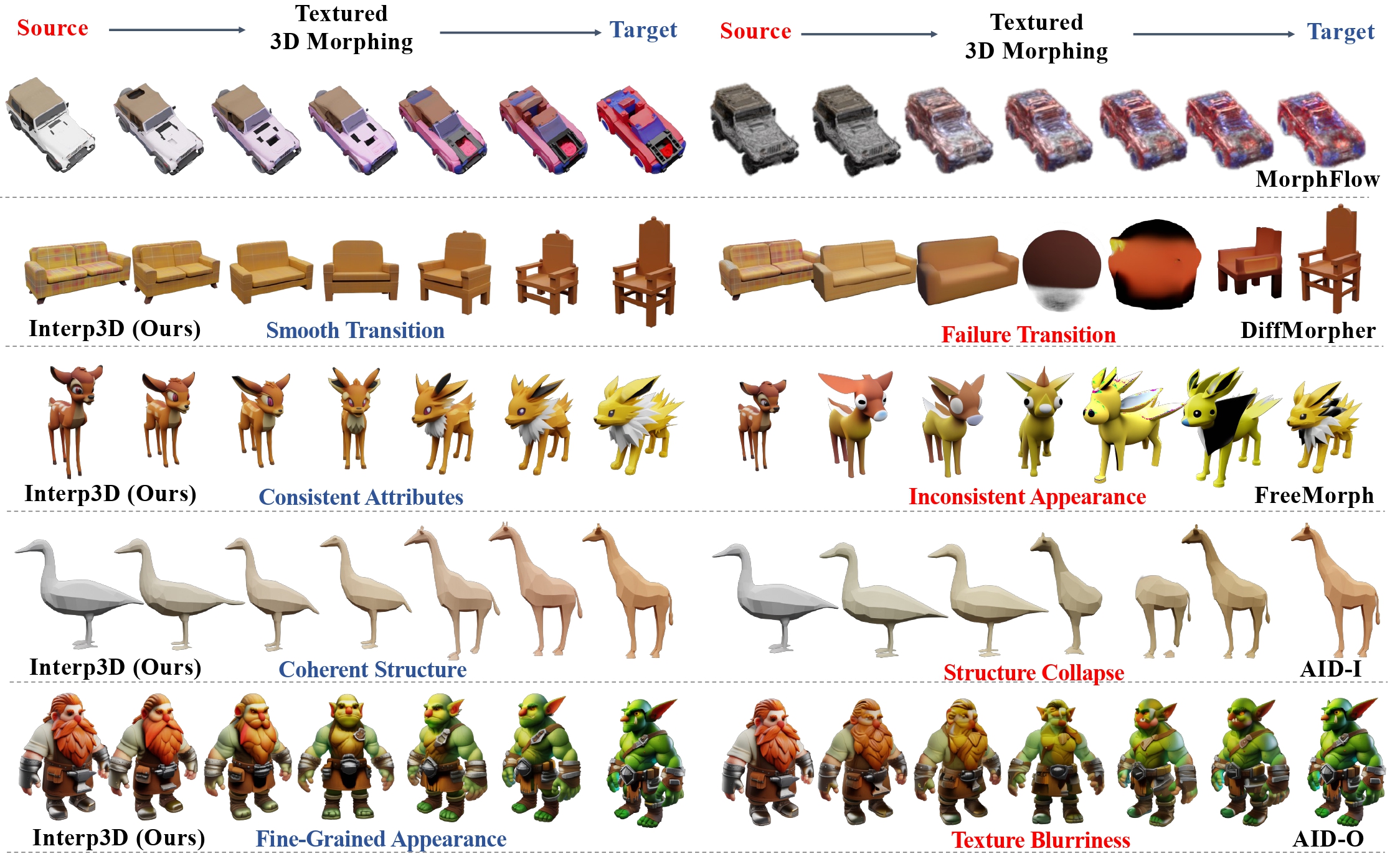

Interp3D: Correspondence-Aware Interpolation for Generative Textured 3D Morphing

Xiaolu Liu, Yicong Li, Qiyuan He, Jiayin Zhu, Wei Ji, Angela Yao, Jianke Zhu

The Fourteenth International Conference on Learning Representations (ICLR), 2026.

AnchorDS: Anchoring Dynamic Sources for Semantically Consistent Text-to-3D Generation

Jiayin Zhu, Linlin Yang, Yicong Li, Angela Yao

The 40th Annual AAAI Conference on Artificial Intelligence, 2026.

InstructHumans: Editing Animatable 3D Human Textures with Instructions

Jiayin Zhu, Linlin Yang, Angela Yao

IEEE Transactions on Multimedia (TMM), 2026.

3D Magic Mirror: Clothing Reconstruction from a Single Image via a Causal Perspective

Zhedong Zheng, Jiayin Zhu, Wei Ji, Yi Yang, Tat-Seng Chua

npj Artificial Intelligence, 2026.

HiFiHR: Enhancing 3D Hand Reconstruction from a Single Image via High-Fidelity Texture

Jiayin Zhu, Zhuoran Zhao, Linlin Yang, Angela Yao

German Conference on Pattern Recognition (GCPR), 2023.

Services

- Teaching Assistant

- CS2100 Computer Organisation in Sem 1 AY2023/2024

- CS4243 Computer Vision and Pattern Recognition in Sem 2 AY2023/2024

- CS4243 Computer Vision and Pattern Recognition in Sem 2 AY2025/2026

- Reviewer: NeurIPS, ECCV, AAAI, ICLR, CVPR, ICML
